Trustable Machine Learning Systems

Jay Morgan

30th March 2021

1 - The Good, the bad, and the ugly

2 - Machine Learning at its "Good"

3 - Machine Learning at its "Ugly"

4 - Machine Learning at its "Bad"

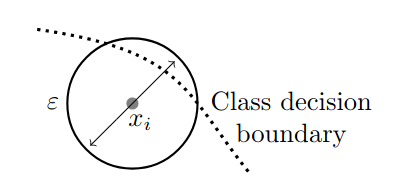

Figure 4: Huang, X., Kwiatkowska, M., Wang, S., & Wu, M. (2017, July). Safety verification of deep neural networks. In International conference on computer aided verification (pp. 3-29). Springer, Cham.

5 - A life with formal methods

6 - What's in todays talk

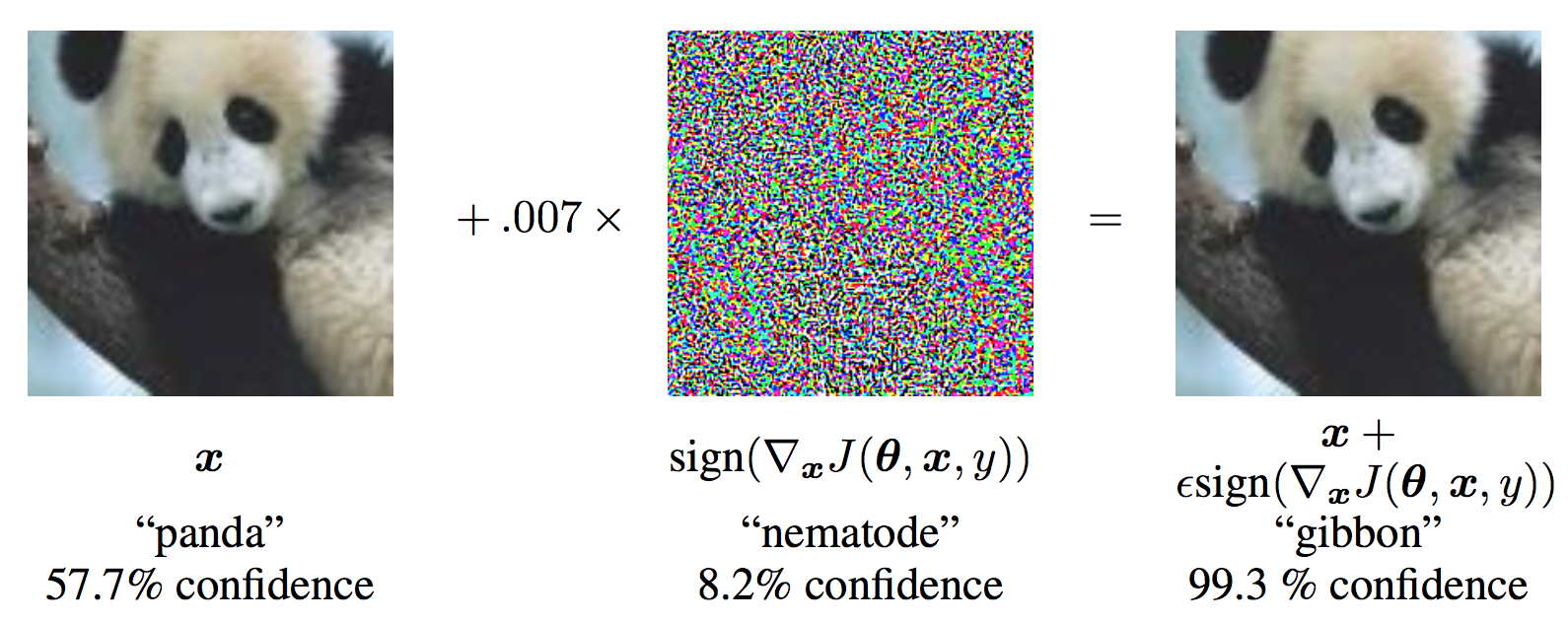

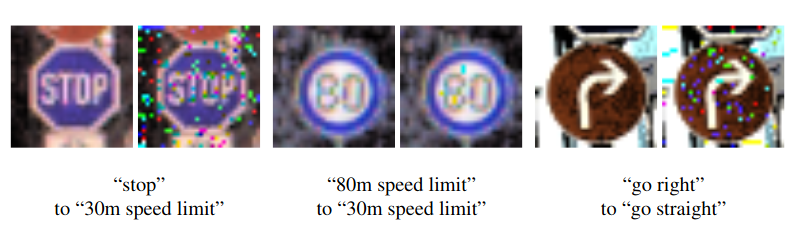

- Explanation of Adversarial examples

- Defining the upper-bounds on where to search for these examples

- Creating Neural Networks and searching for adversarial examples using satisfiability theories. How this can be implemented to enable verification of Neural Network properties.

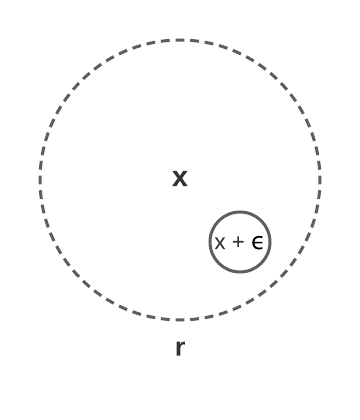

7 - Adversarial examples (mathematical formalisation)

Given some classifier model

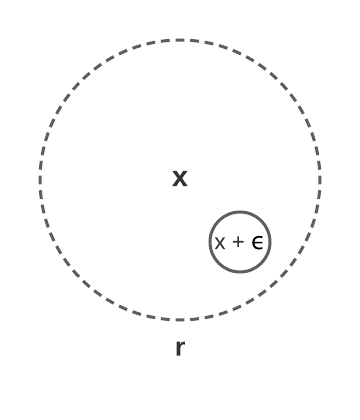

8 - How do we choose an r

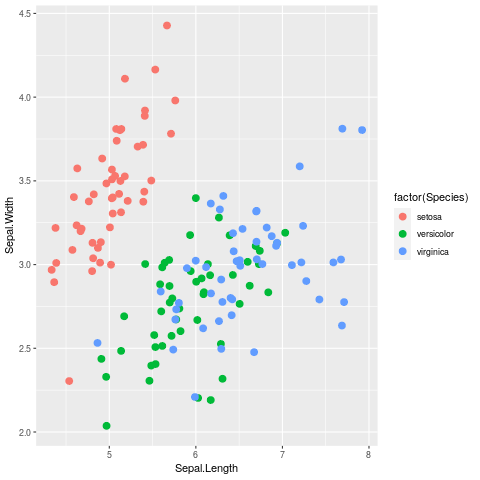

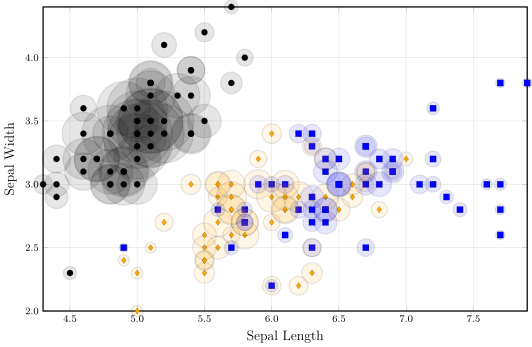

9 - Less clear with non-image data

Iris dataset - classifier aim: predict type of flower from 4 dimensional vector of

Sepal Length, Sepal Width, Petal Length, and Petal Width.

I.e.

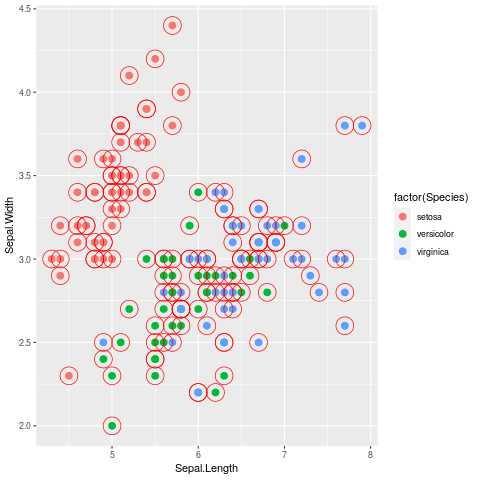

10 - Applying a 'small' r can lead to overlaps of true class boundaries

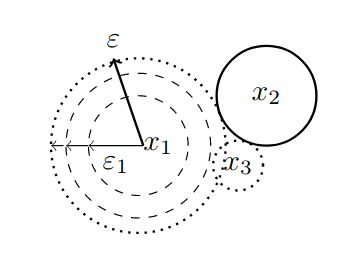

11 - Generate a individual r for each data point

Figure 10: geometric complexity of class boundaries

Figure 11: sparsity/density of sampling from data manifold that consistutes the training data.

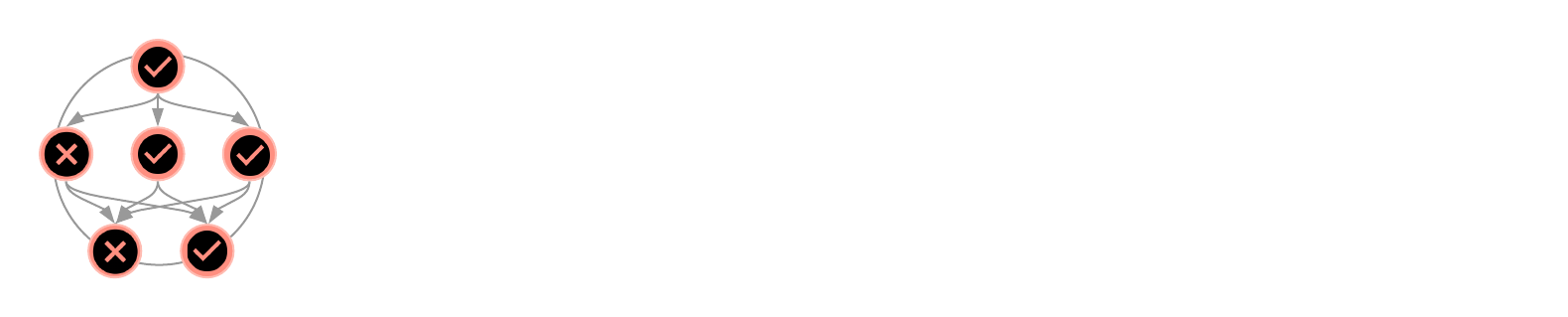

12 - Iterative expansion

In our method we provide an algorithm to iteratively expand the maximum

13 - Modulating by density

Expansion is modulated by the estimated density of data samples. Using an inverse multiquadric radial basis function (RBF) to estimate the density at a given location.

The estimated density for a single point is the sum of RBFS, centered on each point, at this location.

14 - Final result: individual r value for each data point

Morgan, J., Paiement, A., Pauly, A., & Seisenberger, M. (2021). Adaptive neighbourhoods for the discovery of adversarial examples. arXiv preprint arXiv:2101.09108.

15 - Now we must find x+ϵ

16 - Searching for the existence of adversarial examples

https://github.com/jaypmorgan/NeuralVerifier.jl - built on top of Z3 solver to provide an interface to verify Neural Network properties, such as: output bounds checking and adversarial robustness.

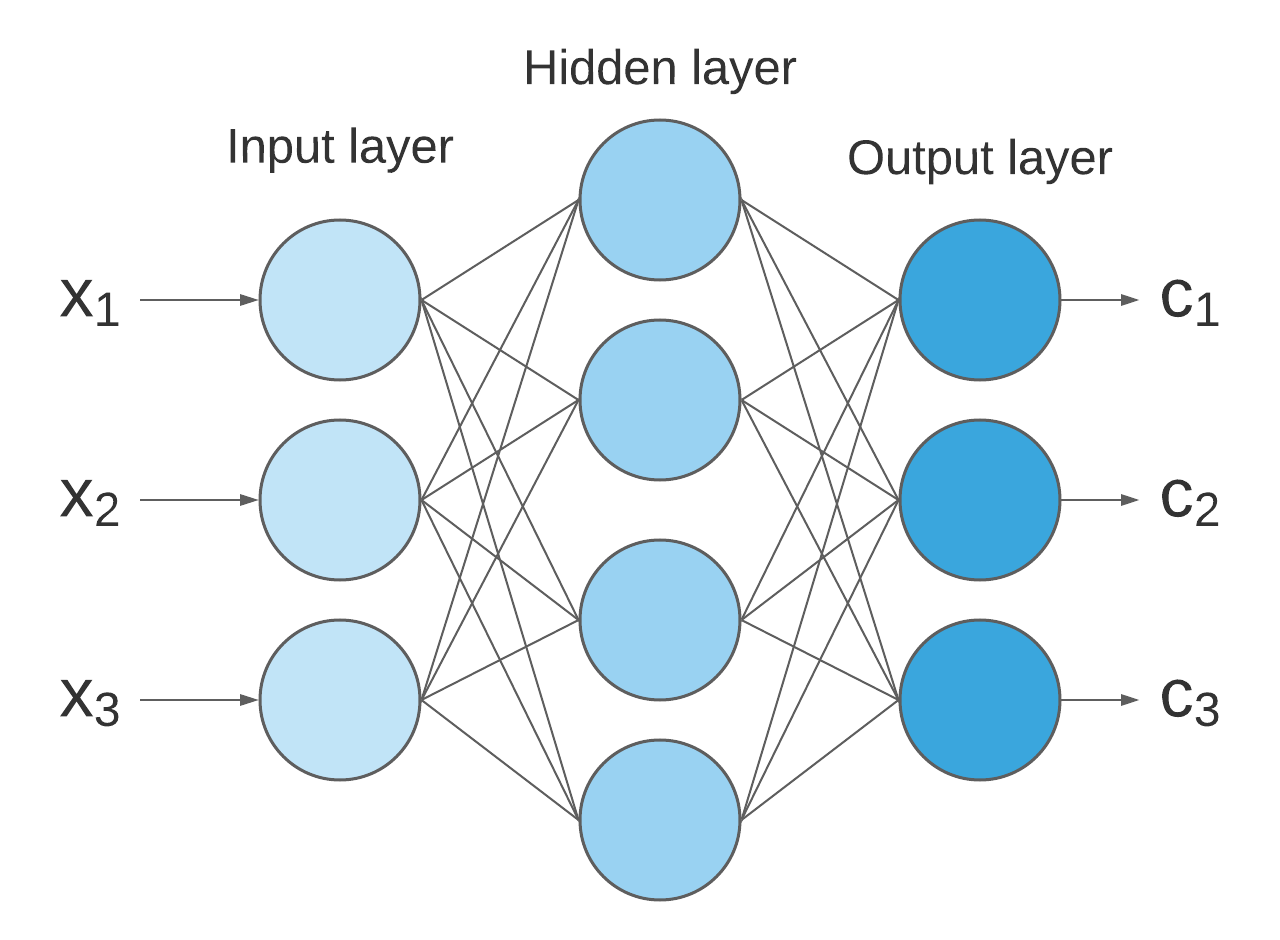

17 - Application of using NeuralVerifier

Take a very simple example of a 3-layer neural network.

18 - Simple Arithmetic

Where

19 - Encoding arithmetic

Z3 provides support for real linear arithmetic and provides operations for the basic multiplication and addition. Thus, we need only to apply these elementwise.

function dense(x, W, b)

out = fill!(Array(undef, size(W,1), size(x,2)), 0)

for i = 1:size(out,1), j = 1:size(W,2)

out[i] += W[i,j] * x[j]

end

out = out .+ b

return out

end

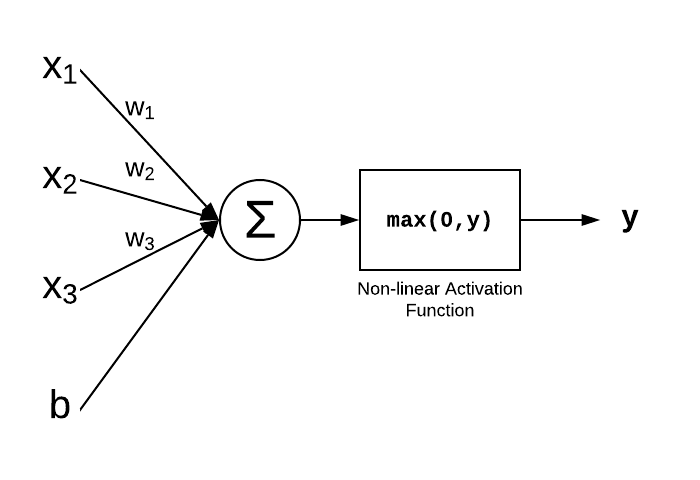

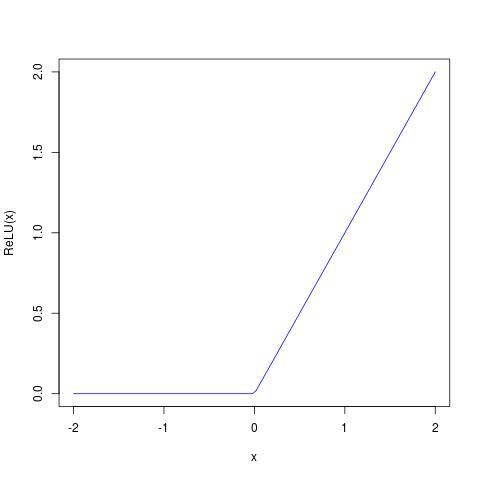

20 - ReLU

Moving onto non-linear functions, we must consider how such non-linearities are encoded in the model. For some of the activation functions, it could be as easy as simple boolean logic.

If(x > 0, x, 0)

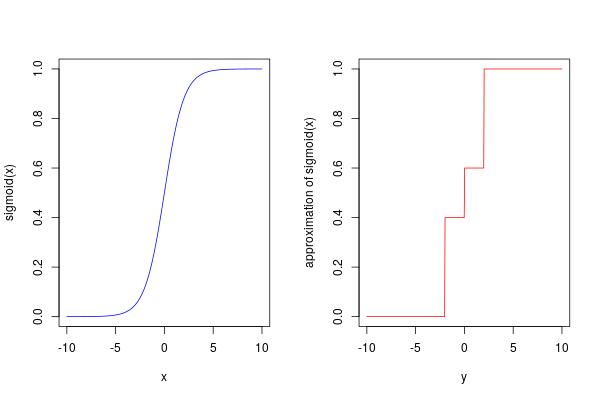

21 - More complex Sigmoid function (using piecewise linear approximation)

function sigmoid(x)

If(x < 0,

If(x < -2, 0.0, 0.4),

If(x > 2, 1.0, 0.6))

end

22 - Putting together a simple layer

function dense(x, W, b)

out = fill!(Array(undef, size(W,1), size(x,2)), 0)

for i = 1:size(out,1), j = 1:size(W,2)

out[i] += W[i,j] * x[j]

end

out = out .+ b

return out

end

function relu(x)

If(x > 0, x, 0)

end

y = relu(dense(x, W, b))

23 - Building an entire model with NeuralVerifier

encoding(x) = begin

y = dense(x,

neural_network[1].W,

neural_network[1].b) |> relu;

y = dense(y,

neural_network[2].W,

neural_network[2].b) |> relu;

y = dense(y,

neural_network[3].W,

neural_network[3].b) |> softmax;

end

24 - Setting up search for adversarial examples

for (idx, (x_i, r_i)) in enumerate(zip(x, r))

m = Optimize() # create an optimisation procedure (model)

add!(m, (eps > 0) ∧ (eps <= r_i)) # bound condition on epsilon

y = encoding(x_i) # get initial condition of y in our encoding

add!(m, y != f(xi)) # add the adversarial example condition

minimize!(m, eps) # find the smallest eps

check(m) # check for satisfiability

m.is_sat == "sat" && @info "#$(idx): Adversarial found!"

end

25 - In NeuralVerifier

r = epsilon_expand(x_train', y_train;

ϵ = 1e-7, # the initial step size

ε = 1.0, # RBF width parameter

func = inverse_multiquadric, # RBF function to use

decay = exponential_decay) # Decay function based on density

stable_area, adv_examples = stable_region(Optimize, f, x_train', r;

timeout = 100,

build_fn = encoding)

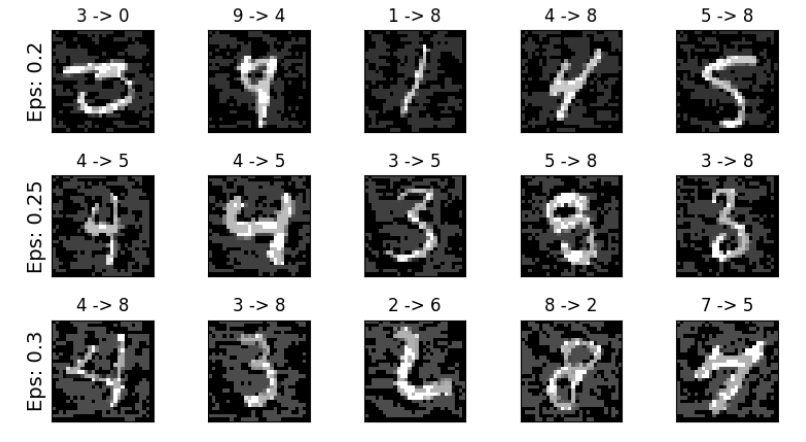

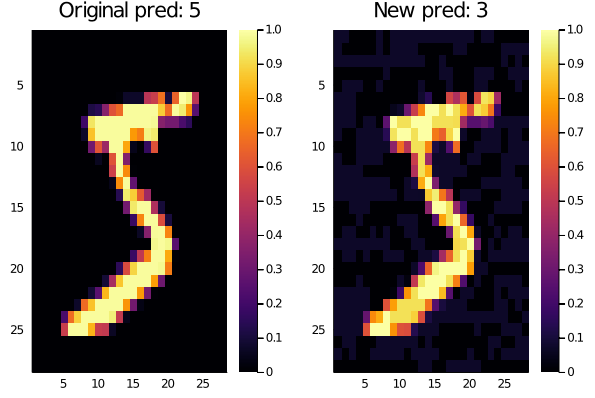

26 - Adversarial Examples Found!

Running on MNIST dataset.

[ Info: #1: Adversarial found! [ Info: #3: Adversarial found! [ Info: #4: Adversarial found! [ Info: #5: Adversarial found! [ Info: #7: Adversarial found! ...

27 - Main contributions

- Using knowledge gleamed from the data manifold to generate individual

r value for each data point. - Open-source platform for verification of Neural Network properties using SMT solvers

28 - A thank you to my supervisors

- Monika Seisenberger (Swansea University)

- Jane Williams (Swansea University)

- Adeline Paiement (Université de Toulon)

29 - Contributions welcome!

You can find these slides on my personal website below. Additionally follow the github link for more documentation and usage on NeuralVerifier.jl